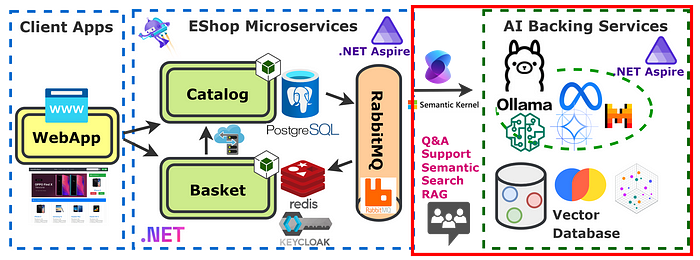

🚀 Chat AI Development with C# Using Ollama and the Llama 3.2 Model, Orchestrated in .NET Aspire

We’re going to create a Chat AI support page that uses Ollama to run the Llama 3.2 model in a .NET application. We’ll integrate the AI services with .NET Aspire to orchestrate them efficiently, and use Microsoft.Extensions.AI to build unified AI workflows.

Why Use Ollama and Llama 3.2 for AI Chatbots?

Ollama is a lightweight and efficient runtime for running large AI models on local hardware, reducing dependency on cloud-based APIs. Llama 3.2, developed by Meta, provides an optimized and powerful LLM that enables contextual, intelligent responses for chatbot applications.

Ollama and Llama Model Hosting Integration with .NET Aspire

.NET Aspire is a cloud-native framework that simplifies microservices development and orchestration. It allows developers to efficiently manage AI workloads with built-in service discovery and containerized deployments.

EShop-distributed GitHub Source Code: https://github.com/mehmetozkaya/eshop-distributed

We’ll integrate Ollama, a runtime for hosting local LLMs such as Llama, into our .NET Aspire solution. We reference Ollama in the AppHost and call .AddModel("llama3.2"). This lets microservices (e.g., Catalog) run AI tasks such as Q&A, chat, and embeddings in a self-contained environment orchestrated by .NET Aspire.

AppHost Install Package: CommunityToolkit.Aspire.Hosting.Ollama

This package extends .NET Aspire so we can call builder.AddOllama("ollama", 11434) to spin up a container that hosts local models (like llama3.2 or the all-minilm embedding model).

AppHost Program.cs Adding Ollama & Llama Model

```csharp
// Backing Services
// ..

var ollama = builder
    .AddOllama("ollama", 11434)
    .WithDataVolume()
    .WithLifetime(ContainerLifetime.Persistent)
    .WithOpenWebUI();

var llama = ollama.AddModel("llama3.2");

// Projects
var catalog = builder
    .AddProject<Projects.Catalog>("catalog")
    .WithReference(catalogDb)
    .WithReference(rabbitmq)
    .WithReference(llama)
    .WaitFor(catalogDb)
    .WaitFor(rabbitmq)
    .WaitFor(llama);
```

Here’s how we wire up Ollama:

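- .AddOllama("ollama", 11434): Declares an Ollama container resource named "ollama" and pins its host port to 11434, Ollama's default port. Without the port argument, .NET Aspire would assign one.

- .WithDataVolume(): Stores downloaded models in a Docker volume so they survive container restarts when running locally (for example, Docker on a dev machine).

- .WithLifetime(ContainerLifetime.Persistent): Keeps the Ollama container running after the AppHost stops, so the next run reuses it instead of recreating it.

- .WithOpenWebUI(): Optionally adds Open WebUI, a browser-based chat interface for exploring the models.

- .AddModel("llama3.2"): Downloads the Llama 3.2 model into Ollama and exposes it as a resource that other microservices can reference.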

To ensure Catalog can call the Llama model, we add:

- .WithReference(llama): Ties the microservice to the Llama model resource.

- .WaitFor(llama): Waits until the Ollama container with the Llama model is up before launching the Catalog service.

- The reference also injects an environment variable, ConnectionStrings__ollama-llama3-2, that carries the endpoint URL and model name: Endpoint=http://localhost:11434;Model=llama3.2.

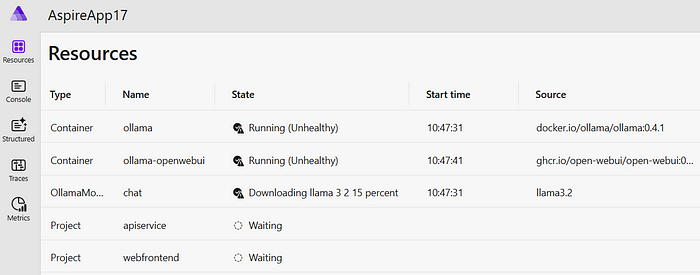

After that, run the .NET Aspire solution. .NET Aspire orchestrates the services and their references. Because Ollama hosts the LLM locally, it downloads the Llama model on the first startup within your .NET Aspire environment.

In microservices that reference llama, look for ConnectionStrings__ollama-llama3-2.

Example value: Endpoint=http://localhost:11434;Model=llama3.2

The Aspire logs show how the microservice calls Ollama.
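Here is a small sketch that makes that value concrete, using only the standard .NET libraries. It maps the environment variable name to its configuration key and then splits the connection string into its endpoint and model. It only illustrates the format. It is an assumption for illustration, not the code the OllamaSharp integration runs internally.

```csharp
using System;
using System.Data.Common;

// Name and value as injected by the AppHost (copied from the example above).
const string envVarName = "ConnectionStrings__ollama-llama3-2";
const string connectionString = "Endpoint=http://localhost:11434;Model=llama3.2";

// .NET configuration treats "__" in environment variable names as the ":" section
// separator, so this variable surfaces as the key "ConnectionStrings:ollama-llama3-2".
string configKey = envVarName.Replace("__", ":");

// DbConnectionStringBuilder splits "key=value;key=value" pairs (keys are case-insensitive).
var parser = new DbConnectionStringBuilder { ConnectionString = connectionString };
var endpoint = new Uri((string)parser["Endpoint"]);
var model = (string)parser["Model"];

Console.WriteLine($"{configKey} -> {endpoint} (model: {model})");
```

In the Catalog service you don't parse this yourself. The client integration reads it by connection name, which is why that name reappears in Program.cs.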

Ollama Client Integration with .NET Aspire

We’ll show how to consume that service from the Catalog microservice, enabling tasks like semantic search or chat-based interactions with the LLM.

Installing the Client NuGet Package

```shell
dotnet add package CommunityToolkit.Aspire.OllamaSharp
```

This library simplifies calls to the Ollama service and automatically reads environment variables such as ConnectionStrings__ollama-llama3-2. We will use Microsoft.Extensions.AI to register an IChatClient in the Catalog microservice's dependency injection container.
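For orientation, OllamaSharp is a .NET client for Ollama's REST API. The sketch below builds the JSON body of a non-streaming request to Ollama's POST /api/chat endpoint by hand, using only System.Text.Json. The question and the shortened system message are illustrative assumptions. In the Catalog service, the client library builds and sends such requests for you.

```csharp
using System;
using System.Text.Json;

// Illustrative question; the model name matches AddModel("llama3.2") in the AppHost.
string question = "What tent do you recommend for heavy rain?";

// Body of a non-streaming chat request to Ollama's POST /api/chat endpoint.
var payload = new
{
    model = "llama3.2",
    stream = false, // ask for one complete JSON reply instead of a token stream
    messages = new[]
    {
        new { role = "system", content = "You only answer questions related to outdoor camping products." },
        new { role = "user", content = question }
    }
};

string json = JsonSerializer.Serialize(payload);
Console.WriteLine(json);

// Sending it by hand (needs Ollama listening on localhost:11434, plus System.Net.Http and System.Text):
// using var http = new HttpClient { BaseAddress = new Uri("http://localhost:11434") };
// using var reply = await http.PostAsync("/api/chat", new StringContent(json, Encoding.UTF8, "application/json"));
```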

Microsoft.Extensions.AI: Unified AI Building Blocks for .NET

The Microsoft.Extensions.AI libraries act like a bridge between your .NET application and various AI services. These libraries let you build AI solutions with minimal concern for the underlying service provider — whether it’s Azure OpenAI, OpenAI, or a local Ollama installation.

Rather than coding directly against a vendor-specific API, you work with high-level interfaces (like IChatClient and IEmbeddingGenerator<TInput, TEmbedding>).

This ensures you can swap out an AI provider without massive rewrites.

Generative AI typically revolves around three major tasks:

- Chat for question-answering or conversation-based usage, such as retrieving data or summarizing content.

- Embedding generation to power vector search, letting you find semantic matches in large corpora.

- Tool calling to let AI “call out” to other services or APIs.

With Microsoft.Extensions.AI, you can code these features in a consistent manner across different LLM providers.

Example: IChatClient

```csharp
IChatClient client =
    environment.IsDevelopment()
        ? new OllamaChatClient(…)
        : new AzureAIInferenceChatClient(…);

var response = await client.GetResponseAsync(messages, options, cancellationToken);
```

Because you code against the chat interface, you can use one client class for local development (like OllamaChatClient) and a different one (like AzureAIInferenceChatClient) in production. Both classes implement IChatClient, so the code that calls GetResponseAsync(…) stays unchanged.

Register OllamaSharpChatClient with Microsoft.Extensions.AI

Microsoft.Extensions.AI defines provider-agnostic interfaces such as IChatClient. OllamaSharp implements these interfaces, so its client can be registered using the AddOllamaSharpChatClient extension method.

```csharp
builder.AddOllamaSharpChatClient("ollama-llama3-2");
```

The name must match the connection string name injected by the AppHost. After adding IChatClient to the builder, you can get the IChatClient instance using dependency injection. For example, to inject it into a service:

```csharp
using Microsoft.Extensions.AI;

public class ExampleService(IChatClient chatClient)
{
    // Use chat client…
}
```

IChatClient and IEmbeddingGenerator come from Microsoft.Extensions.AI. Here is the registration in Catalog/Program.cs:

```csharp
using System.Reflection;
using Microsoft.SemanticKernel;

var builder = WebApplication.CreateBuilder(args);

// Add services to the container.
builder.AddNpgsqlDbContext<ProductDbContext>("catalogdb");
builder.Services.AddScoped<ProductService>();
builder.Services.AddMassTransitWithAssemblies(Assembly.GetExecutingAssembly());

builder.AddOllamaSharpChatClient("ollama-llama3-2"); // ADDED
//builder.AddOllamaSharpEmbeddingGenerator("ollama-all-minilm");
//builder.Services.AddInMemoryVectorStoreRecordCollection<int, ProductVector>("products");

builder.Services.AddScoped<ProductAIService>();

builder.AddServiceDefaults();

var app = builder.Build();
// ..
```

These lines register the IChatClient.


Develop ProductAIService.cs Class for Business Layer — Customer Support Chat AI

We’ll build a ProductAIService that serves as the business logic layer for AI-driven customer support chat and Q&A. It injects the IChatClient registered in Program.cs and uses it in a SupportAsync method that provides AI chat for customer support use cases.

ProductAIService.cs

```csharp
using Microsoft.Extensions.AI;

public class ProductAIService(
    IChatClient chatClient)
{
    public async Task<string> SupportAsync(string query)
    {
        var systemPrompt = """
            You are a useful assistant.
            You always reply with a short and funny message.
            If you do not know an answer, you say 'I don't know that.'
            You only answer questions related to outdoor camping products.
            For any other type of questions, explain to the user that you only answer outdoor camping products questions.
            At the end, Offer one of our products: Hiking Poles-$24, Outdoor Rain Jacket-$12, Outdoor Backpack-$32, Camping Tent-$22
            Do not store memory of the chat conversation.
            """;

        // A fresh history per call: the system instructions plus the user's question.
        var chatHistory = new List<ChatMessage>
        {
            new ChatMessage(ChatRole.System, systemPrompt),
            new ChatMessage(ChatRole.User, query)
        };

        // CompleteAsync is the preview-era name; newer Microsoft.Extensions.AI releases
        // call it GetResponseAsync, which returns a ChatResponse whose Text holds the reply.
        var resultPrompt = await chatClient.CompleteAsync(chatHistory);

        return resultPrompt.Message.Text ?? string.Empty;
    }
}
```

We inject IChatClient through the primary constructor for AI calls, and SupportAsync receives the user's query. The systemPrompt instructs the model how to respond (short, funny, product-specific). We build a chatHistory with a system message and a user message, then call chatClient.CompleteAsync() to get the AI’s reply. The method returns the text so that an endpoint or minimal API can serve it.

System prompts are crucial for controlling model behavior:

- We keep the answer short, funny, and relevant only to outdoor camping products.

- If the user asks about something else (unrelated domain), we instruct the AI to politely refuse or mention it only knows about camping gear.

- We also append a quick product suggestion to nudge users toward a purchase.
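One detail is worth making explicit. Ollama's chat API keeps no conversation state between calls, so the model sees exactly the messages sent with each request. The "Do not store memory" line in the prompt is not what makes SupportAsync single-turn. Building a fresh list on every call does. The sketch below models this with plain (role, text) tuples standing in for ChatMessage, an assumption made to keep it dependency-free, and the prompt text is abbreviated.

```csharp
using System;
using System.Collections.Generic;

// Abbreviated stand-in for the full systemPrompt in ProductAIService.
const string systemPrompt = "You only answer questions related to outdoor camping products.";

// Mirrors SupportAsync: every call builds a brand-new (role, text) list,
// so nothing from earlier calls is sent to the model.
List<(string Role, string Text)> BuildMessages(string query) => new()
{
    ("system", systemPrompt),
    ("user", query)
};

var first = BuildMessages("Which backpack is best for a weekend hike?");
var second = BuildMessages("And what about rain gear?");

// The second request carries only the system prompt and its own question;
// the model never sees the backpack question, so "And what about..." has no context.
Console.WriteLine(second.Count); // 2
foreach (var (role, text) in second)
{
    Console.WriteLine($"{role}: {text}");
}
```

Supporting follow-up questions would mean keeping a history per user and resending it, with each reply appended, on the next call. The service above is single-turn by design.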

Develop ProductEndpoints Support Query Endpoint for Chat Q&A

We’ll create a support endpoint in our Catalog microservice’s ProductEndpoints class. This endpoint injects ProductAIService, which handles the AI chat logic, to return relevant answers to user questions about camping products.

ProductEndpoints.cs

```csharp
group.MapGet("/support/{query}", async (string query, ProductAIService service) =>
{
    var response = await service.SupportAsync(query);
    return Results.Ok(response);
})
.WithName("Support")
.Produces(StatusCodes.Status200OK);
```

- "/support/{query}": The user provides their question in the URL path.

- ProductAIService service is automatically injected by ASP.NET minimal API DI.

- We await service.SupportAsync(query), and Results.Ok returns the AI-generated text serialized as a JSON string.
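Because the handler wraps the reply in Results.Ok, the string is written as a JSON string value, quotes included, rather than as bare text. That is why the Blazor client reads it with GetFromJsonAsync<string>. Here is a small System.Text.Json sketch of that round trip; the reply text is made up.

```csharp
using System;
using System.Text.Json;

// Made-up model reply standing in for the string SupportAsync returns.
string reply = "Stay dry! Try our Camping Tent.";

// Results.Ok(reply) serializes the value as JSON, so the body is a quoted JSON string.
string body = JsonSerializer.Serialize(reply);
Console.WriteLine(body); // "Stay dry! Try our Camping Tent."

// GetFromJsonAsync<string> on the client reverses this, yielding the plain text again.
string? roundTripped = JsonSerializer.Deserialize<string>(body);
Console.WriteLine(roundTripped);
```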

Blazor FrontEnd Support Page Development

We’ll create a Support page in the Blazor WebApp that integrates with the Catalog microservice’s AI-based support logic. The user types a question, we send it to /products/support/{query}, and we display the AI response right in the Blazor UI.

CatalogApiClient.cs

```csharp
public async Task<string> SupportProducts(string query)
{
    // Escape the question so spaces and '?' stay part of the route value.
    var response = await httpClient.GetFromJsonAsync<string>($"/products/support/{Uri.EscapeDataString(query)}");
    return response!;
}
```

- We add SupportProducts(string query) in CatalogApiClient.cs, which sends a GET request to /products/support/{query}.

- The method returns the string response from the Catalog microservice.

That’s all we need for the Blazor front end to leverage AI chat logic from the Catalog service.
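Putting free text into a URL path needs care. A question such as "What tent do you recommend for heavy rain?" contains spaces and a '?'. An unescaped '?' (or '#') ends the path, so the route value would be cut short. Here is a minimal sketch of escaping the value with Uri.EscapeDataString before building the path.

```csharp
using System;

// The sample question from the test section; note the spaces and trailing '?'.
string query = "What tent do you recommend for heavy rain?";

// Unescaped, everything from '?' onward would be parsed as a query string.
string unsafePath = $"/products/support/{query}";

// Uri.EscapeDataString percent-encodes every character outside the RFC 3986 unreserved set.
string safePath = $"/products/support/{Uri.EscapeDataString(query)}";

Console.WriteLine(unsafePath);
Console.WriteLine(safePath); // /products/support/What%20tent%20do%20you%20recommend%20for%20heavy%20rain%3F
```

ASP.NET Core decodes the route value again, so the endpoint receives the original question text.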

Creating the Support.razor Component

```razor
@page "/support"
@attribute [StreamRendering(true)]
@rendermode InteractiveServer
@inject CatalogApiClient CatalogApiClient

<PageTitle>Support</PageTitle>

<p>Ask questions about our amazing outdoor products that you can purchase.</p>

<div class="form-group">
    <label for="query" class="form-label">Type your question:</label>
    <div class="input-group mb-3">
        <input type="text" id="query" class="form-control" @bind="queryTerm" placeholder="Enter your query…" />
        <button id="btnSend" class="btn btn-primary" @onclick="DoSend" type="submit">Send</button>
    </div>
    <hr />
</div>

@if (response != null)
{
    <p><em>@response</em></p>
}

@code {
    private string queryTerm = default!;
    private string response = default!;

    private async Task DoSend(MouseEventArgs e)
    {
        // Ignore clicks with an empty question instead of calling the API.
        if (string.IsNullOrWhiteSpace(queryTerm))
        {
            return;
        }

        response = "Loading..";
        await Task.Delay(500);
        response = await CatalogApiClient.SupportProducts(queryTerm);
    }
}
```

We inject CatalogApiClient to call the newly added SupportProducts method. The user's text is bound to queryTerm, and clicking Send calls DoSend(), which sets response to the AI’s output.


Query Flow

When the user hits Send:

- The Blazor front end calls SupportProducts(queryTerm) in CatalogApiClient.cs.

- That triggers a GET to /products/support/{query}.

- The Catalog microservice’s AI method (ProductAIService) returns a short, humorous reply.

- We display it in <p><em>@response</em></p>.

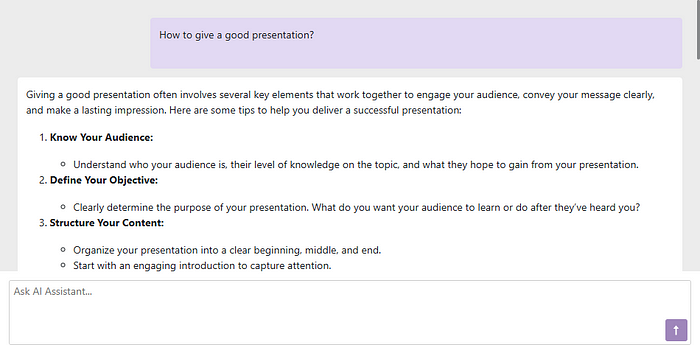

Test WebApp Blazor Support Page for Chat and Q&A

We’ll test our Blazor WebApp’s Support page, which calls the Catalog microservice for AI-driven Q&A.

- Run the entire solution (AppHost)

- Navigate to https://localhost:<port>/support

- Type something like “What tent do you recommend for heavy rain?”

Conclusion

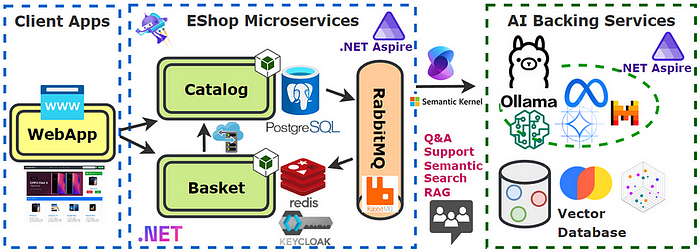

By combining Ollama, Llama 3.2, .NET Aspire, and Microsoft.Extensions.AI, developers can build scalable AI chatbots with efficient inference, cloud-native deployment, and unified AI integration.

Udemy Course: .NET Aspire and GenAI Develop Distributed Architectures — 2025

Develop AI-powered distributed architectures using .NET Aspire and GenAI. Build EShop Catalog and Basket microservices that integrate with backing services, including PostgreSQL, Redis, RabbitMQ, Keycloak, Ollama and Semantic Kernel, to create intelligent e-shop solutions.

You will gain real-world experience and a solid understanding of .NET Aspire and .NET Generative AI to design, develop and deploy AI-powered distributed enterprise applications.